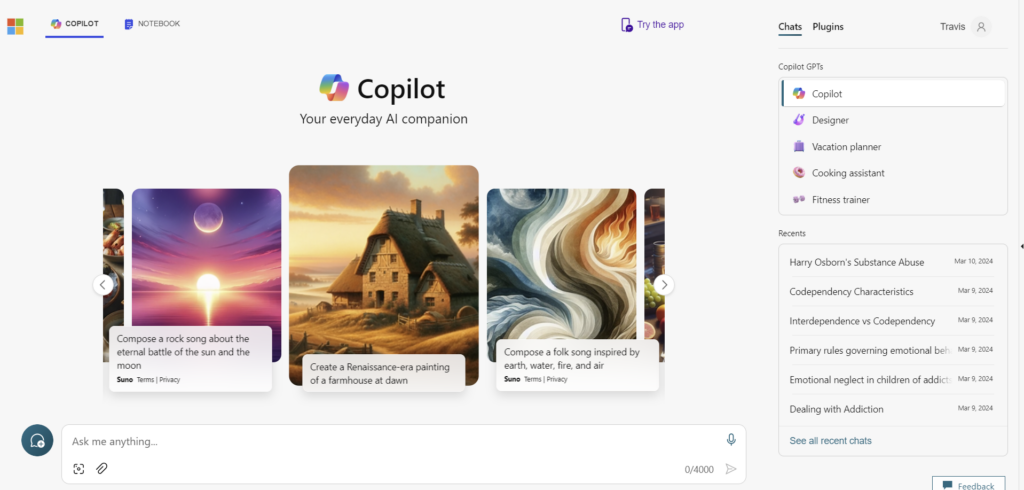

If you’ve been using tools like OpenAI’s ChatGPT, Google’s Bard/Gemini or Microsoft Copilot, you may have asked the AI bot sensitive questions about events in your life, from drug abuse to a failing relationship. These are sensitive subjects, and they shouldn’t be easy for others to view through your chat history. While Google provides an option to disable keeping logs of chats entirely, Microsoft doesn’t. There are no settings to disable the history, hide the prominent display of recent topics, or even lock the history until the user is verified (either through sign-in or 2FA).

I discovered this while using Microsoft Copilot to organize notes I took for my college courses. I don’t suffer from substance abuse or relationship issues, and most use cases for this AI aren’t sensitive. But someone who is in an abusive relationship, struggling with addiction, or dealing with any other sensitive issue is in a different position, and those topics shouldn’t be so freely available. By displaying these chats so prominently, without authenticating the user or offering any setting to disable chat history, Microsoft could ruin these people’s lives by broadcasting their conversations for anyone to see. There isn’t even an option to disable history in the privacy section of your own Microsoft account!

👍👍

Thank you for this enlightening article.

This is very troubling, though I am not surprised that the company founded by Bill Gates would have a hidden nefarious side. What do they do with all these collected logs? That said, to play devil’s advocate: even if the other AI programs delete the logs on the consumer-facing side, do they delete them internally as well?

Interesting, good to know! Thank you!